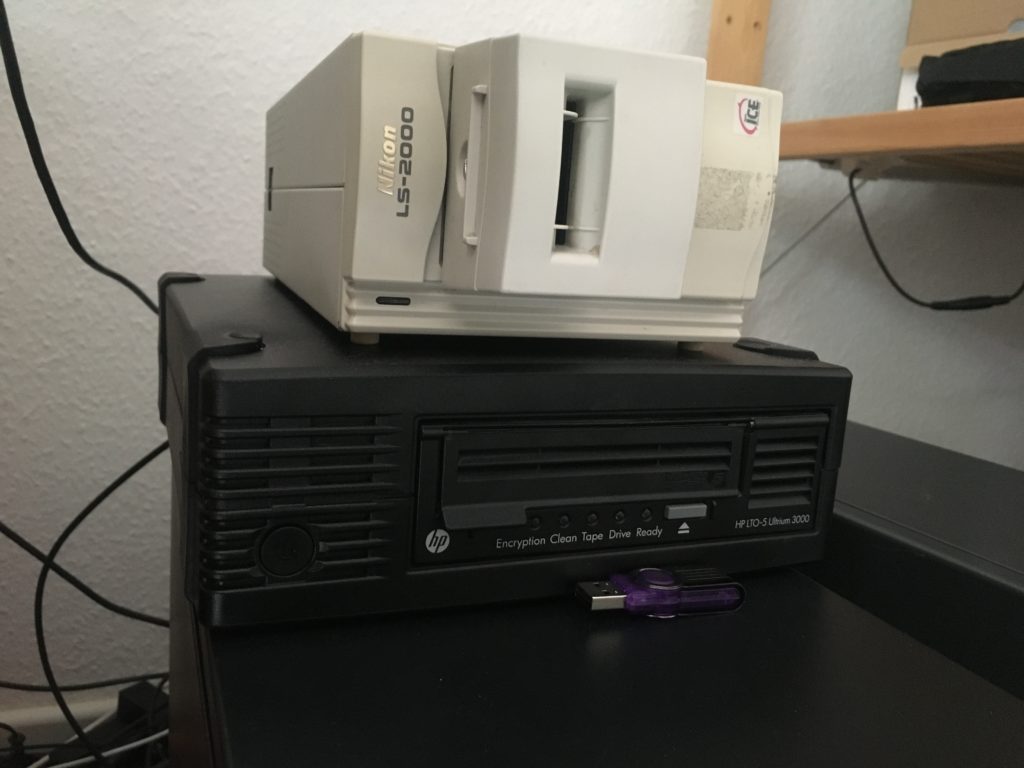

In the previous part, I learned how to write data to LTO4 tape, got frustrated and splurged on an LTO5 drive to take advantage of LTFS. The drive came – and it works! It even came with a free HP H222 SAS adapter and 2 new LTO5 tapes.

Yup, screams “serious business”.

Why LTFS is useful

- No more writing down which file was in which block on paper.

- No more waiting for one file to finish writing before dd-ing the next one. I can copy bunches of files at once.

- Folders! Just like a USB stick or DVD.

- Windows has no easy way to read Linux tarballs off a tape, and Linux has no software to read backups made by Windows backup programs, but both can read and write LTFS.

It sounds like basic stuff, but before LTFS the only ways to write to tape were expensive proprietary software or Linux’s tar+dd.

Judging by the sound of the tape drive, though, LTFS might wear out your drive sooner.

I used Windows 10 and staged the files to be backed up on a dedicated 7200rpm 1TB Hitachi hard drive.

I had a few hundred folders, each with one 700MB video file and one subtitle file. This meant that no sooner had the tape drive reached full speed than it had to suddenly slow down and write a small file. So instead of 140MB/s I was seeing 60-80MB/s, with the drive changing its speed all the time and possibly even shoe-shining the tape a bit as it rewound back to where the smaller file should have been written.
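As a back-of-envelope check, those numbers hint at how much time each small-file interruption cost. This sketch assumes every 700MB file would otherwise stream at the full 140MB/s and that the entire slowdown is a fixed stop/start penalty per folder (the 1kB subtitle’s own write time is ignored). The function name is made up for illustration.

```python
def implied_overhead_s(file_mb: float, peak_mb_s: float, observed_mb_s: float) -> float:
    """Seconds lost per file, assuming all slowdown is a fixed per-file penalty (a simplification)."""
    ideal_s = file_mb / peak_mb_s       # time to stream the file at full speed
    actual_s = file_mb / observed_mb_s  # time implied by the observed average speed
    return actual_s - ideal_s


for observed in (60, 70, 80):
    lost = implied_overhead_s(700, 140, observed)
    print(f"{observed} MB/s observed -> about {lost:.1f} s lost per file")
```

Under those assumptions, 60-80MB/s works out to roughly 4 to 7 seconds lost per folder, on top of the 5 seconds it takes to stream 700MB at full speed.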

After an hour of listening to this, I started to worry that I was causing more wear and tear on the drive than if I hadn’t used LTFS. So I made a huge tarball of my movies. But after going to the trouble of formatting the tape and making said .tar file, the tape drive still changed its pitch all the time.

Nevertheless, just make sure you only copy relatively large files to the tape, because the convenience of having folders is just huge.

After this 200GB tarball was written, I couldn’t open it and browse through the folders without the tape having to spend 3 minutes reading the entire .tar file. I reformatted the tape and went back to listening to my drive wear itself out, writing the 700MB video files and 1kB subtitle files to the tape individually.

In the end, the convenience of having folders mattered more to me than maximizing the drive’s lifespan. Just zip things up first when copying many small files, like photos or your music collection.
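A minimal sketch of the “zip it first” advice using Python’s standard zipfile module. It assumes you build the archive on a local staging disk and then copy the single .zip to the LTFS tape. The function name and any paths are hypothetical.

```python
import zipfile
from pathlib import Path


def bundle_folder(src_dir: str, zip_path: str) -> int:
    """Pack every file under src_dir into one .zip so the tape sees one large file.

    ZIP_STORED skips compression: photos and music are already compressed, so
    deflating them mostly burns CPU time. Returns the number of files packed.
    """
    src = Path(src_dir)
    out = Path(zip_path).resolve()
    count = 0
    with zipfile.ZipFile(out, "w", compression=zipfile.ZIP_STORED, allowZip64=True) as zf:
        for path in sorted(src.rglob("*")):
            # Skip directories and the archive itself, in case it lives inside src_dir.
            if not path.is_file() or path.resolve() == out:
                continue
            # Store paths relative to src_dir so the folder layout is preserved.
            zf.write(path, arcname=path.relative_to(src).as_posix())
            count += 1
    return count
```

For example, `bundle_folder(r"D:\staging\photos", r"D:\staging\photos.zip")` produces one big file, which you then copy to the tape’s drive letter in a single pass.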

To reduce the burden on the tape drive, don’t open files from the tape. Use ltfscopy for many files.

An 11GB .mkv movie required the tape to wind to multiple positions just to open the file, perhaps because the subtitles/chapters are stored after the video+audio data.

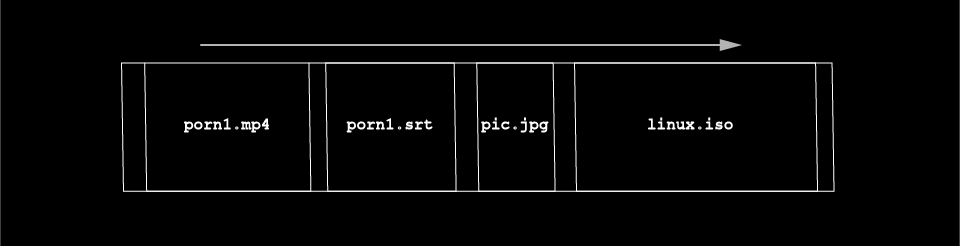

This only applies when copying lots of files off the tape: because LTFS makes the tape look like a normal hard drive, Windows has no idea that the files on tape are actually written in the order shown in the image above.

It only knows that you sorted by name in Explorer (for example), so it will copy linux.iso, pic.jpg, porn1.mp4 and porn1.srt in that order, making the poor tape drive jump back and forth a lot. Use ltfscopy instead, which is smarter about such things and should be in C:\Program Files\HPE\LTFS.
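The idea behind copying in tape order can be sketched in a few lines: look up where each file starts on tape and copy in that order instead of by name. This is not how ltfscopy is implemented, just an illustration. It assumes the tape is mounted with an LTFS driver on Linux that exposes each file’s starting block as the virtual extended attribute `user.ltfs.startblock` (treat that name as an assumption for your LTFS build); `os.getxattr` is Linux-only, so on Windows ltfscopy remains the practical choice. All function names are hypothetical.

```python
import os
import shutil
from typing import Callable, Iterable, List


def ltfs_start_block(path: str) -> int:
    """Return the tape block where a file's data starts.

    Assumption: an LTFS driver on Linux exposes this as the extended
    attribute user.ltfs.startblock (os.getxattr only exists on Linux).
    """
    return int(os.getxattr(path, "user.ltfs.startblock"))


def tape_order(paths: Iterable[str],
               start_block: Callable[[str], int] = ltfs_start_block) -> List[str]:
    """Sort paths by their position on tape rather than by name."""
    return sorted(paths, key=start_block)


def copy_in_tape_order(paths: Iterable[str], dest_dir: str,
                       start_block: Callable[[str], int] = ltfs_start_block) -> None:
    """Copy files so the drive reads mostly front to back instead of seeking around."""
    for src in tape_order(paths, start_block):
        shutil.copy2(src, dest_dir)
```

Passing `start_block` as a parameter keeps the ordering logic separate from how the position is looked up, so it can be swapped for whatever your LTFS build exposes.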

dd + tar is still useful

Looking at the tape drive’s history with HPE Library and Tape Tools, it seems the last owner only wrote about 300GB at a time to LTO5 and also at 60-80MB/s. Apparently he didn’t lose much sleep over the fact that it was writing much slower than it could’ve (140MB/s) and that the drive might be wearing out faster.

If you have lots of data that you will most likely restore all at once, such as backups where you won’t pick and choose individual files, the old-fashioned way is still the best. You get maximum write speed and you don’t wear the drive out prematurely. Plus, formatting a tape with LTFS costs you around 70GB of space.

Afterwards, I used the LTO5 drive to write to an LTO4 tape with dd+tar and was very relieved to hear it sound normal (holding a consistent pitch). I think I’ll keep a VMware image around just for this.
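For reference, here is a rough Python equivalent of piping tar into dd with a large block size: one uncompressed tar stream written strictly sequentially, which is what lets the drive hold full speed. It assumes Linux’s st driver, the non-rewinding device /dev/nst0, and a drive set to variable-block mode. The 512KiB block size is an assumed value to tune, and the function name is hypothetical.

```python
import tarfile
from pathlib import Path

BLOCK_SIZE = 512 * 1024  # assumed 512 KiB write size; tune for your drive


def write_tar_stream(src_dir: str, device: str = "/dev/nst0", bufsize: int = BLOCK_SIZE) -> None:
    """Write src_dir to the device as a single uncompressed tar stream.

    Mode "w|" is tarfile's streaming mode: it never seeks and hands the
    output to the device in writes of up to bufsize bytes.
    """
    src = Path(src_dir)
    # buffering=0 so each tarfile write goes straight to the device.
    with open(device, "wb", buffering=0) as dev:
        with tarfile.open(fileobj=dev, mode="w|", bufsize=bufsize) as tar:
            tar.add(str(src), arcname=src.name)
```

Because there is no per-file seeking or metadata update, the only stop the drive sees is at the end of the archive. The trade-off is the one described above: there is no index, so finding a single file means reading through the stream.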

I am running into a different issue with my LTO6 drive. I did a server backup from the datacenter to my local NAS. It’s from a web / mail / DB server: roughly 2TB of data spread across more than 3.5 million files. I dragged the whole thing to my tape drive and it started copying. On larger files speeds would exceed 100 MB/sec, and on smaller files it might slow down to 20 MB/sec. But then, about 1 million files into the process, it got really slow, writing a single file every couple of seconds. These files are not large, just small JPGs of maybe 1 MB each. It’s now estimating it will take over 24 hours to write the remaining 2.5 million files. I wish I could figure out the reason.